2025

CoFeel

Sharing the unique journey of pregnancy through biosignal-driven technology and virtual reality

BCI

VR

Industrial Design

Sharing the unique journey of pregnancy

Pregnancy is a journey filled with emotions, sensations, and connections, yet these experiences are often felt in isolation. CoFeel seeks to bridge this gap by translating the pregnancy experience into a shared visual and tactile interaction, turning it into a journey experienced together.

My role in the project

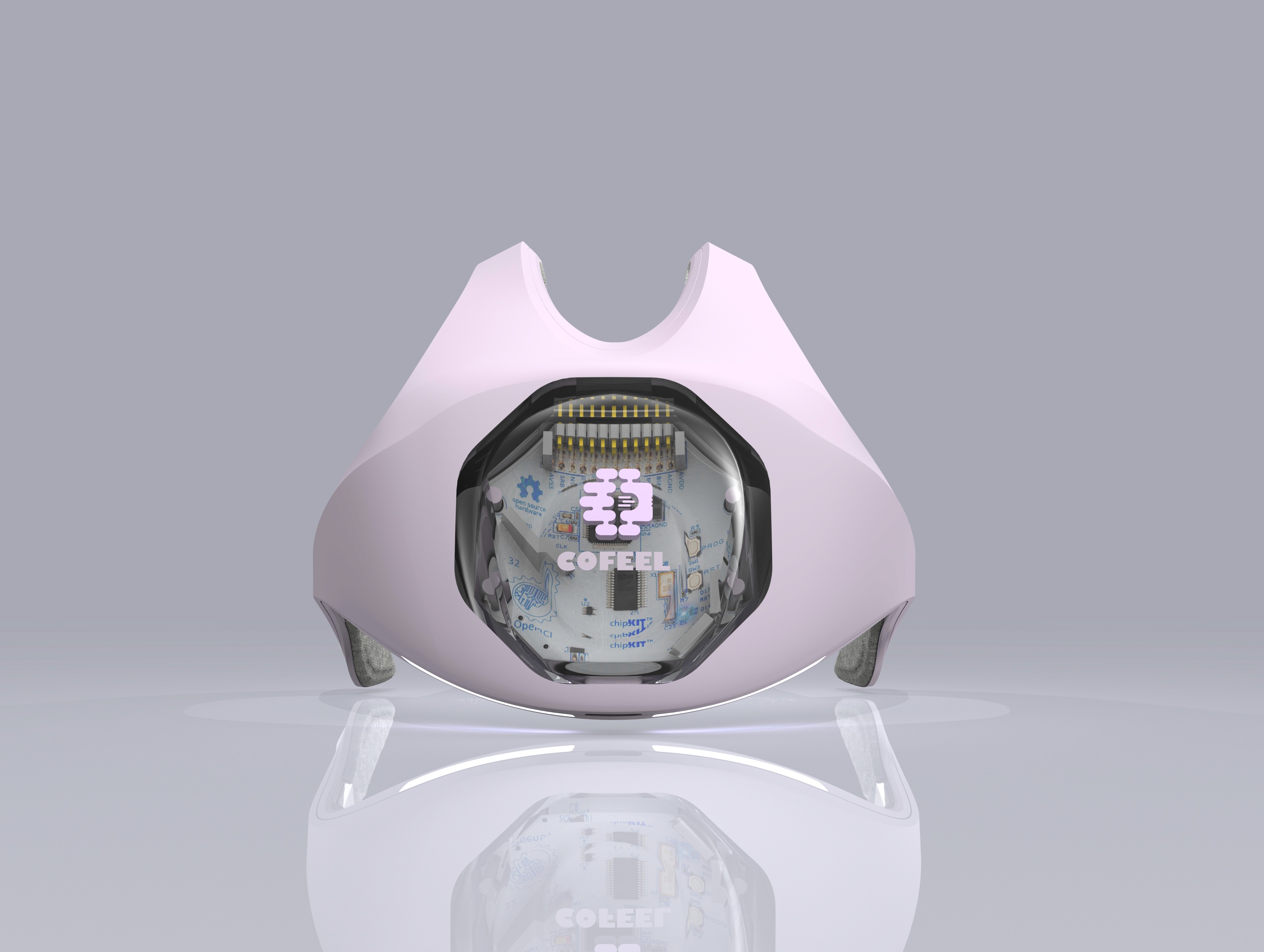

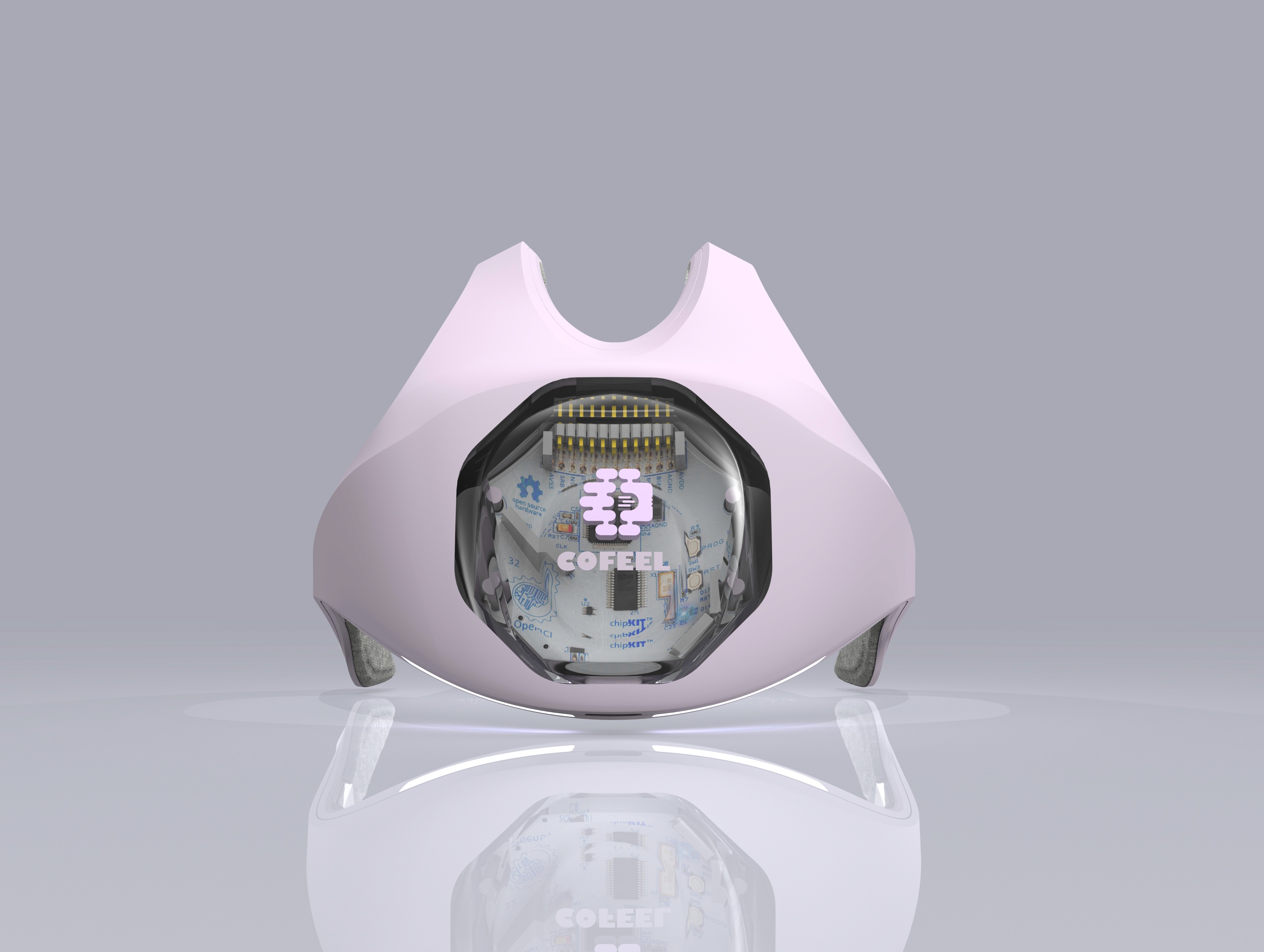

As the designer on a multidisciplinary team of five (including neuroscientists, a Unity backend expert, and a data scientist), I initiated the project idea and brought together collaborators from UC Berkeley, Harvard, and beyond. I focused on integrating human-centered wearable technology with immersive visualization. Specifically, I designed a soft wearable with embedded EMG sensors that sits on the pregnant mother’s belly to capture fetal signals, and a head-mounted EEG sensor setup to record maternal brain activity. I also developed the Unity-based visualization interface that translates the sensor data into a real-time, emotionally engaging experience.

Our project, CoFeel, used OpenBCI products and was named a Grand Finalist and won 2nd Place for Best Use of OpenBCI at the MIT Reality Hackathon 2025.

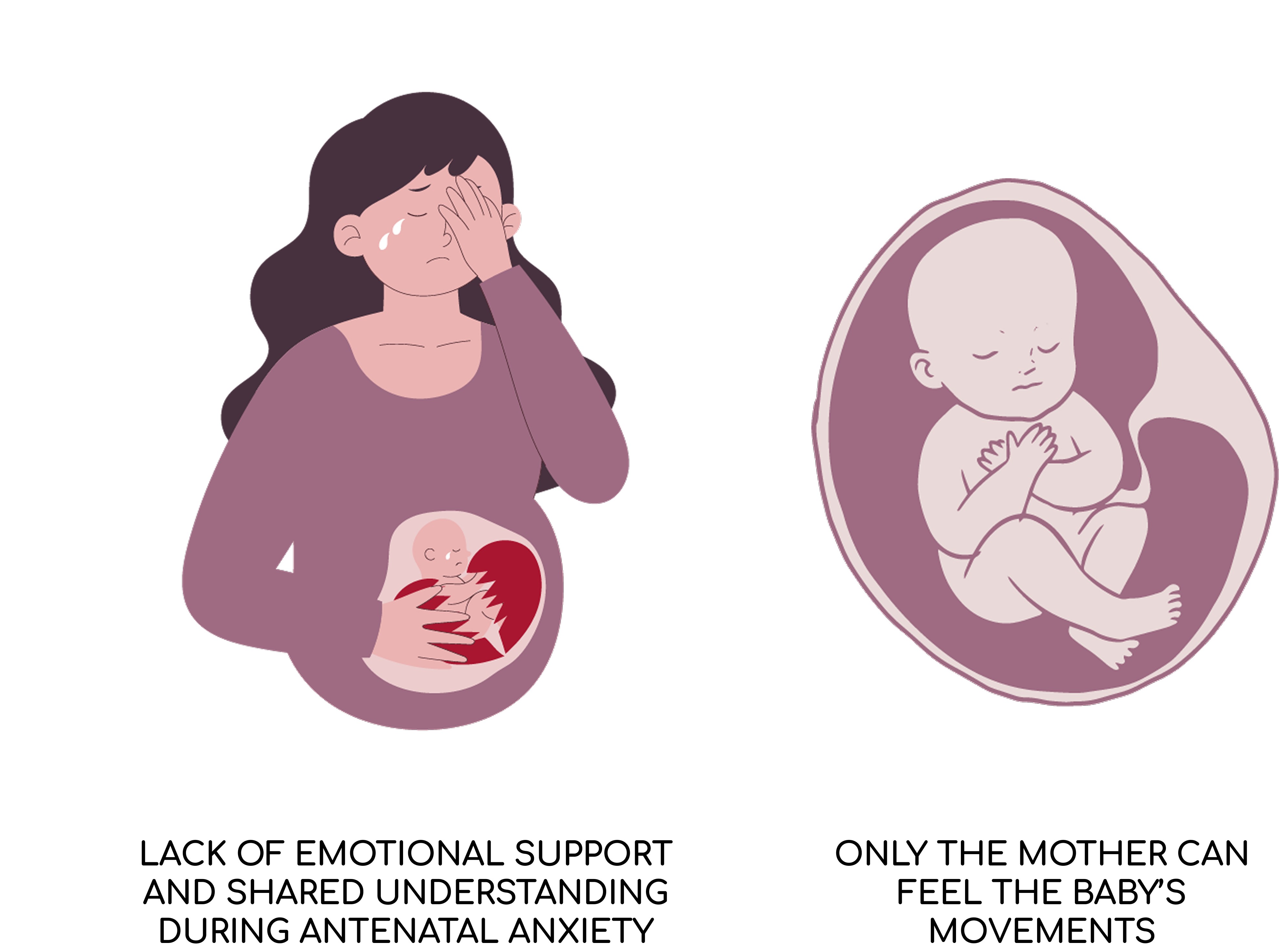

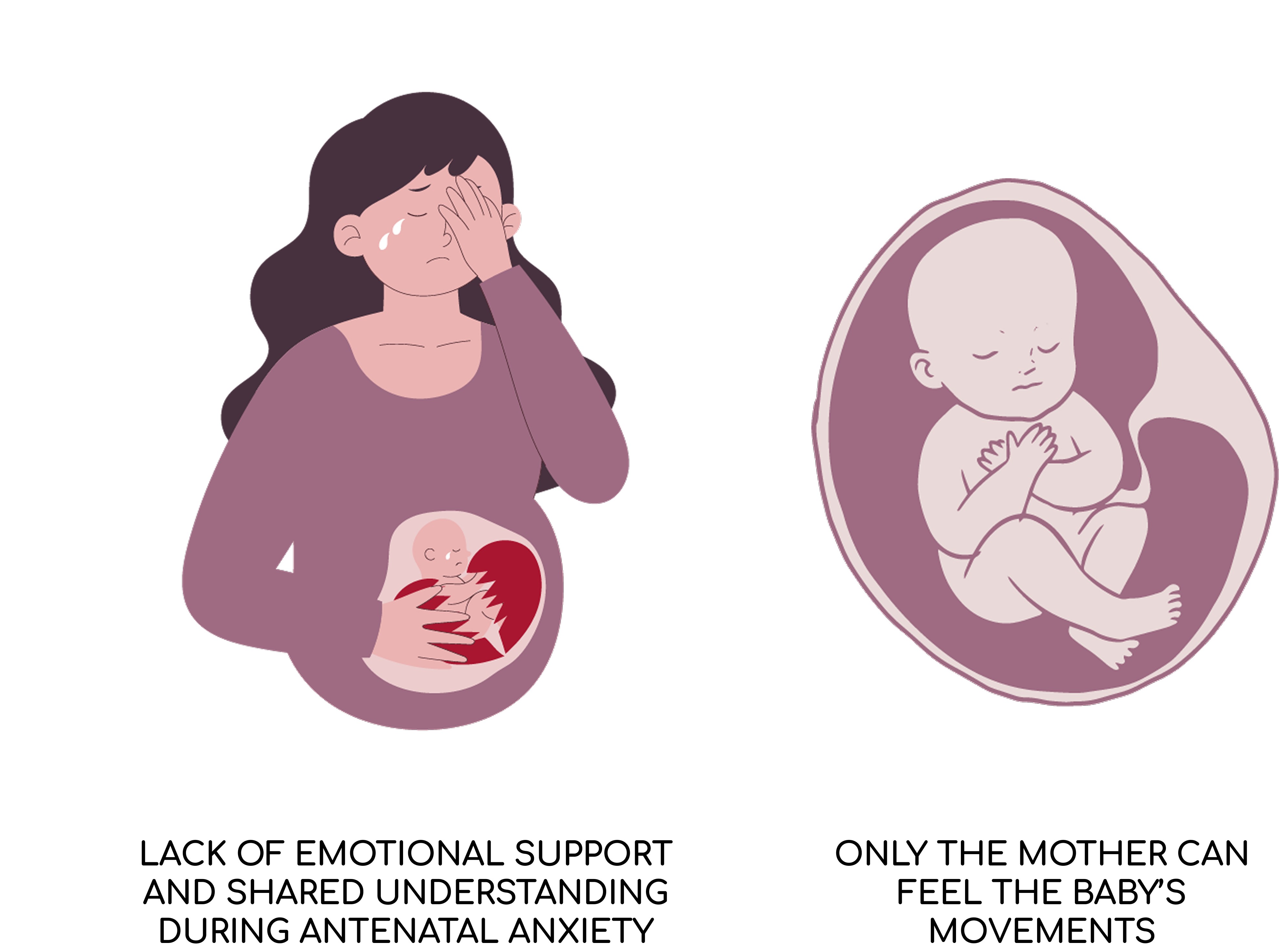

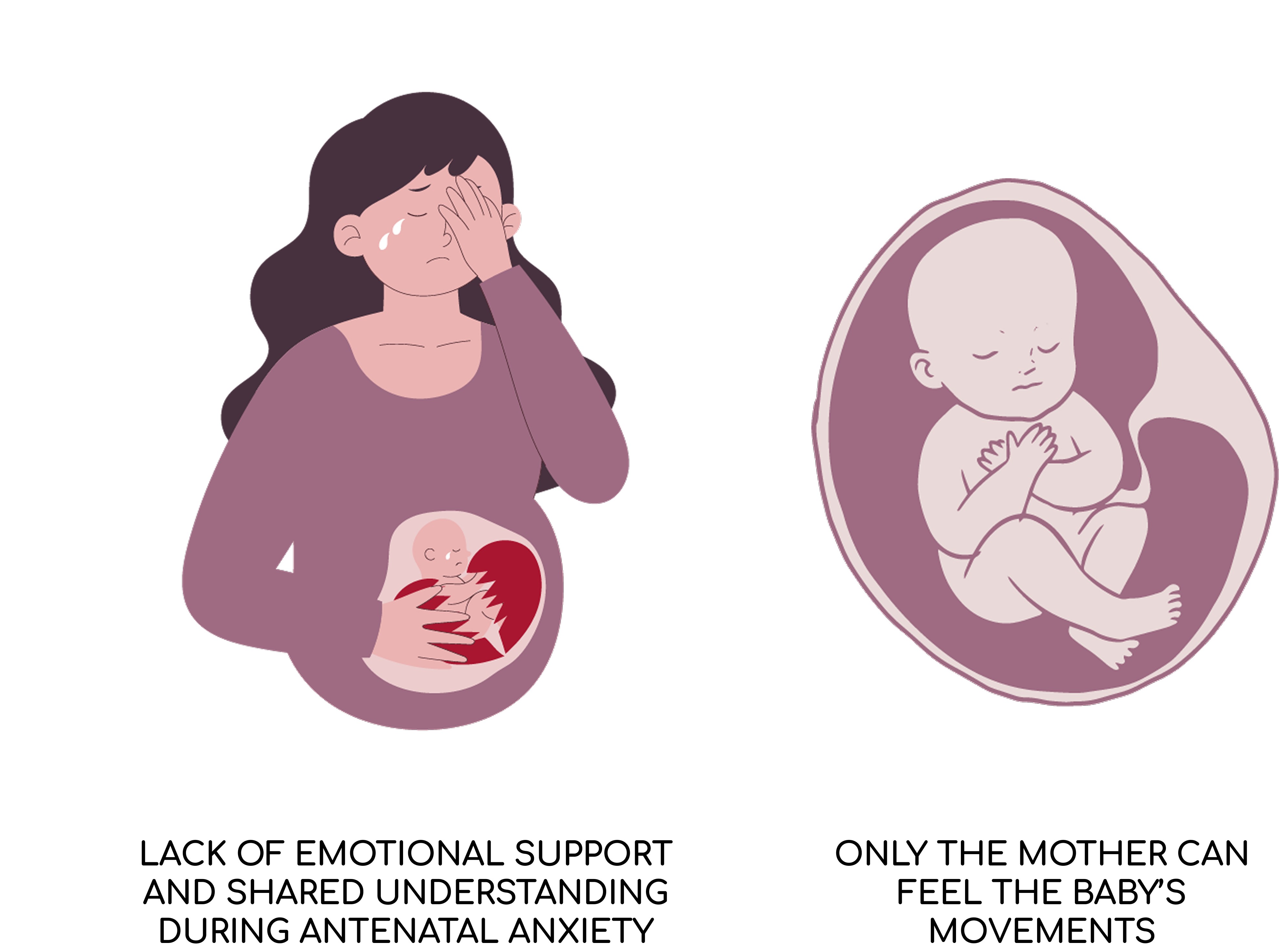

Problem

Pregnancy can be a time of joy — and stress. Globally, the World Health Organization (WHO) estimates that around 10% of pregnant women experience prenatal depression, with rates rising to 15% or higher in low- and middle-income countries.

Dr. Jun Yang, an obstetrician from the Department of Obstetrics & Gynecology, explains: "Pregnancy is a transformative period marked by profound physiological and emotional changes. Beyond physical strain, many women—especially first-time mothers—grapple with anxiety, mood fluctuations, and heightened emotional sensitivity as they navigate this transition. Research shows that negative emotions during pregnancy can impact maternal health, increase the risk of complications, and even influence fetal development. Tools that make emotional states visible and facilitate shared experiences can play a crucial role in enhancing family support, strengthening connections, and promoting a more positive pregnancy journey."

Market and Opportunity

Most pregnancy monitoring products focus almost entirely on the mother, reinforcing the idea that pregnancy is her sole responsibility. Our product challenges this notion.

Solution

The CoFeel system visualizes both the mother’s emotions and the baby’s movements through a dynamic VR environment in Unity.

VR lets the father become more deeply involved in the pregnancy journey, which can reduce the mother’s sense of emotional burden and isolation. His active participation can also help ease her anxiety and strengthen her emotional security as childbirth approaches.

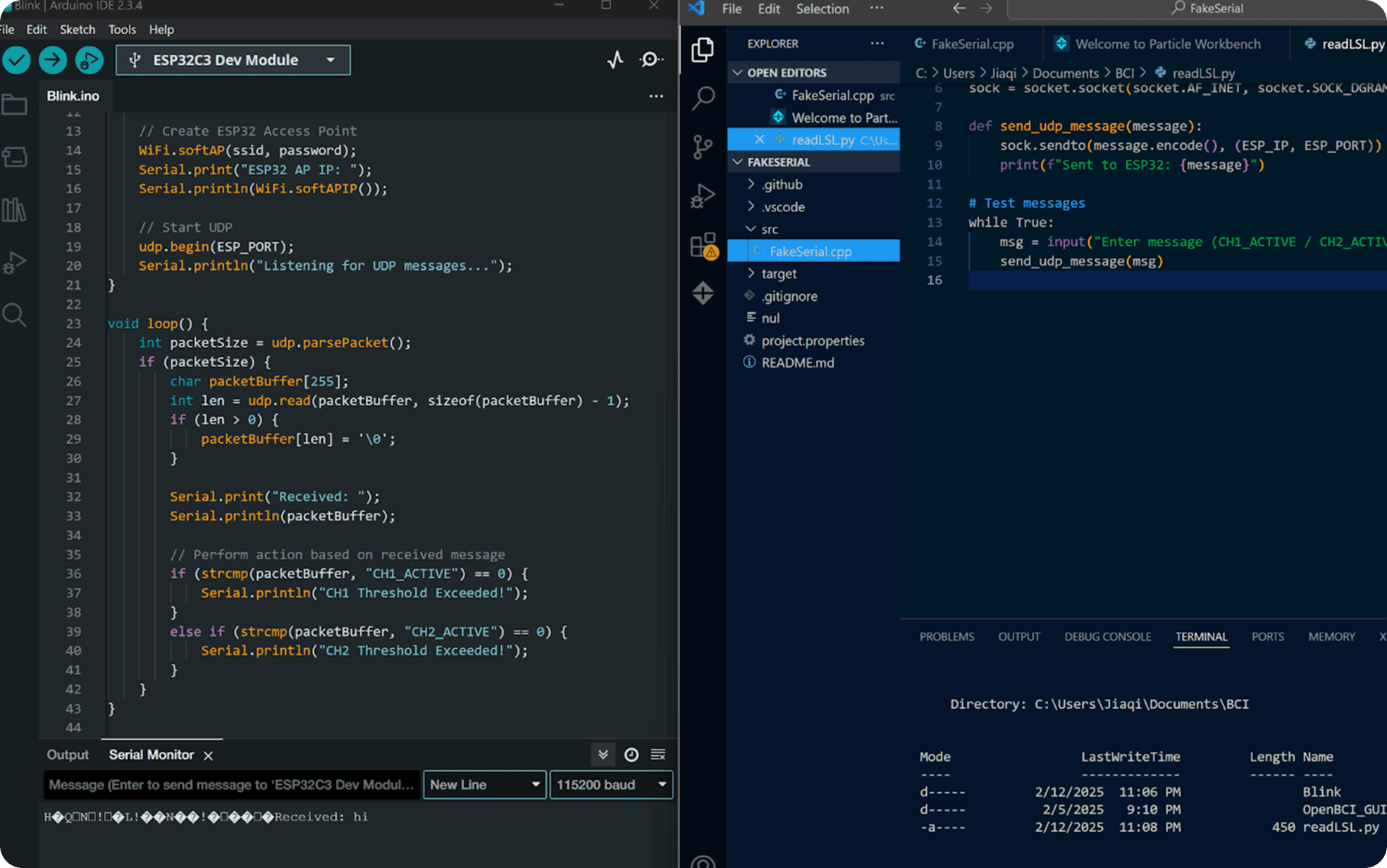

BCI Exploration

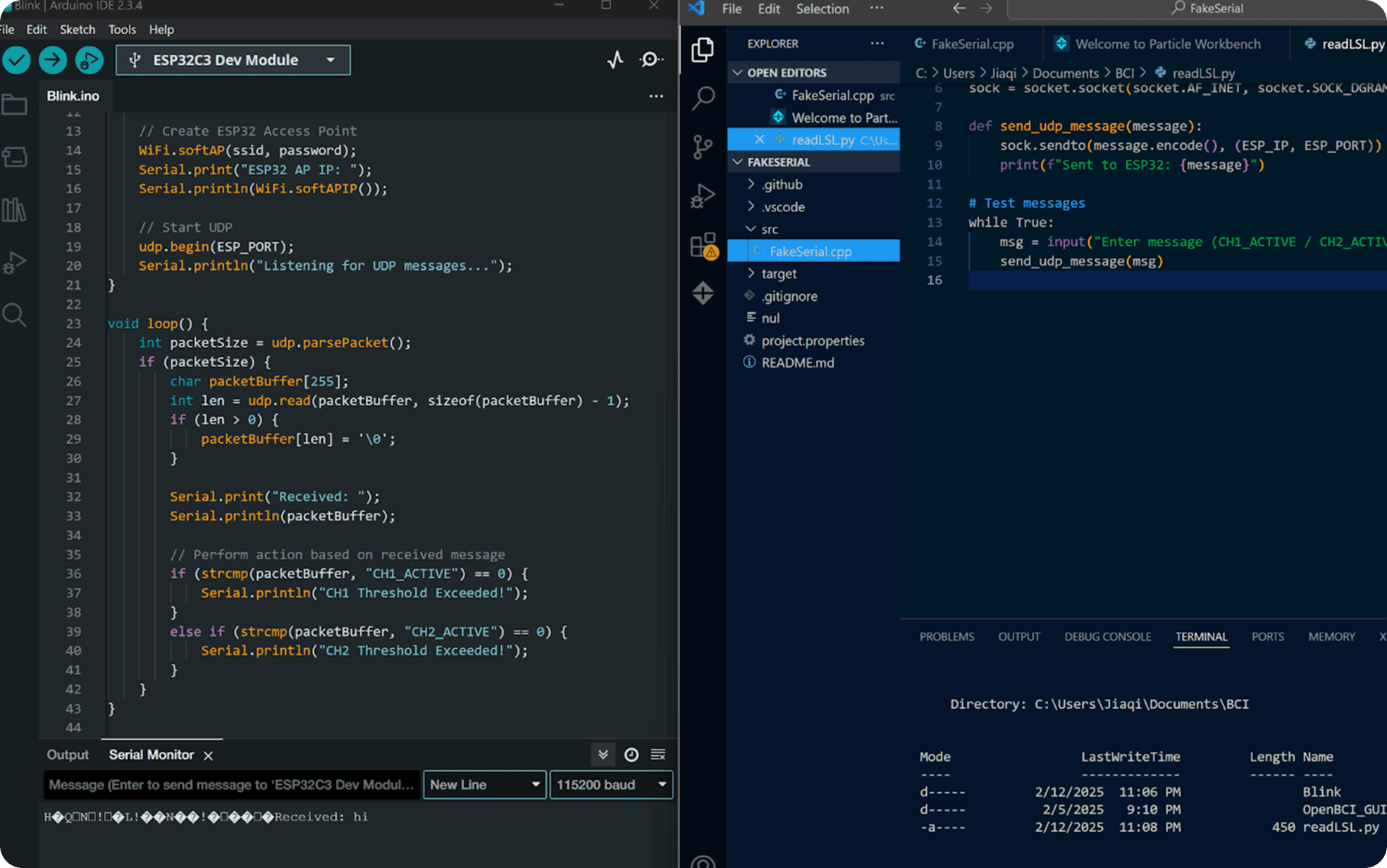

We used two key types of sensors to capture physiological and neural responses: EEG (electroencephalography) and EMG (electromyography).

EEG sensors measure electrical activity in the brain, helping us track emotional and cognitive responses. We focused on the F3, F4, and F regions of the frontal lobe, which are involved in emotional processing, decision-making, and attention. We also placed sensors at O1 and O2 over the occipital lobe at the back of the head. These sites are commonly used to track relaxation: research links increased alpha (α) and theta (θ) activity over the occipital region to calmness and reduced anxiety. By monitoring these signals, our system estimated the user’s emotional state and updated the VR environment in real time.
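The alpha/theta idea above can be sketched in Python. This is a minimal illustration, not the team’s actual pipeline: it assumes a single-channel window sampled at 250 Hz (the OpenBCI Cyton board’s default rate), conventional theta (4 to 8 Hz) and alpha (8 to 13 Hz) band edges, and a hypothetical `relaxation_index` defined as the share of 1 to 40 Hz power that falls in those two bands.

```python
import numpy as np

THETA = (4.0, 8.0)    # Hz, conventional theta band
ALPHA = (8.0, 13.0)   # Hz, conventional alpha band
TOTAL = (1.0, 40.0)   # Hz, reference band used for normalisation


def band_power(signal, fs, band):
    """Unnormalised power in [band[0], band[1]) Hz from a Hann-windowed FFT."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                   # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs < band[1])
    return float(spectrum[mask].sum())


def relaxation_index(signal, fs):
    """Hypothetical calmness score in [0, 1]: theta+alpha share of 1-40 Hz power."""
    total = band_power(signal, fs, TOTAL)
    if total == 0.0:
        return 0.0                                     # flat signal: no evidence either way
    calm = band_power(signal, fs, THETA) + band_power(signal, fs, ALPHA)
    return calm / total


if __name__ == "__main__":
    fs = 250.0                                         # assumed sampling rate (Hz)
    t = np.arange(0, 2.0, 1.0 / fs)                    # 2-second analysis window
    alpha_like = np.sin(2 * np.pi * 10 * t)            # 10 Hz, alpha-dominated
    beta_like = np.sin(2 * np.pi * 25 * t)             # 25 Hz, beta-dominated
    print(relaxation_index(alpha_like, fs) > relaxation_index(beta_like, fs))
```

Normalising by total power makes the score less sensitive to electrode contact quality than raw band power would be, though a real system would still need per-user calibration and artifact rejection.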

EMG sensors measure muscle activity by detecting the electrical signals generated by muscle contractions. We placed these sensors over the left and right rectus abdominis (the muscles of the abdominal wall) to detect physical sensations such as muscle tension and simulated fetal movements.
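A rectify-and-smooth envelope with a threshold is one simple, widely used way to turn a raw EMG trace into discrete movement events, such as the kicks visualized in VR. The sketch below is an assumption-laden illustration: the 100 ms smoothing window, the threshold, the 0.5 s refractory period, and the function names are hypothetical and would need calibrating against each wearer’s resting baseline.

```python
import numpy as np


def emg_envelope(signal, fs, window_s=0.1):
    """Full-wave rectify an EMG trace and smooth it with a moving average."""
    x = np.asarray(signal, dtype=float)
    x = np.abs(x - x.mean())                           # remove offset, then rectify
    n = max(1, int(round(window_s * fs)))              # window length in samples
    return np.convolve(x, np.ones(n) / n, mode="same")


def detect_events(envelope, fs, threshold, refractory_s=0.5):
    """Indices where the envelope crosses `threshold` upward, ignoring any
    crossing that occurs within `refractory_s` seconds of the previous event."""
    refractory = int(round(refractory_s * fs))
    above = np.asarray(envelope) >= threshold
    events, last = [], None
    for i in range(1, len(above)):
        rising = above[i] and not above[i - 1]
        if rising and (last is None or i - last > refractory):
            events.append(i)
            last = i
    return events


if __name__ == "__main__":
    fs = 250                                           # assumed sampling rate (Hz)
    trace = np.zeros(1000)
    burst = np.sin(2 * np.pi * 100 * np.arange(60) / fs)
    trace[200:260] = burst                             # two synthetic contractions
    trace[700:760] = burst
    print(detect_events(emg_envelope(trace, fs), fs, threshold=0.3))
```

The refractory period keeps one sustained contraction from being reported as several events; each returned index could then trigger a single visual pulse in the scene.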

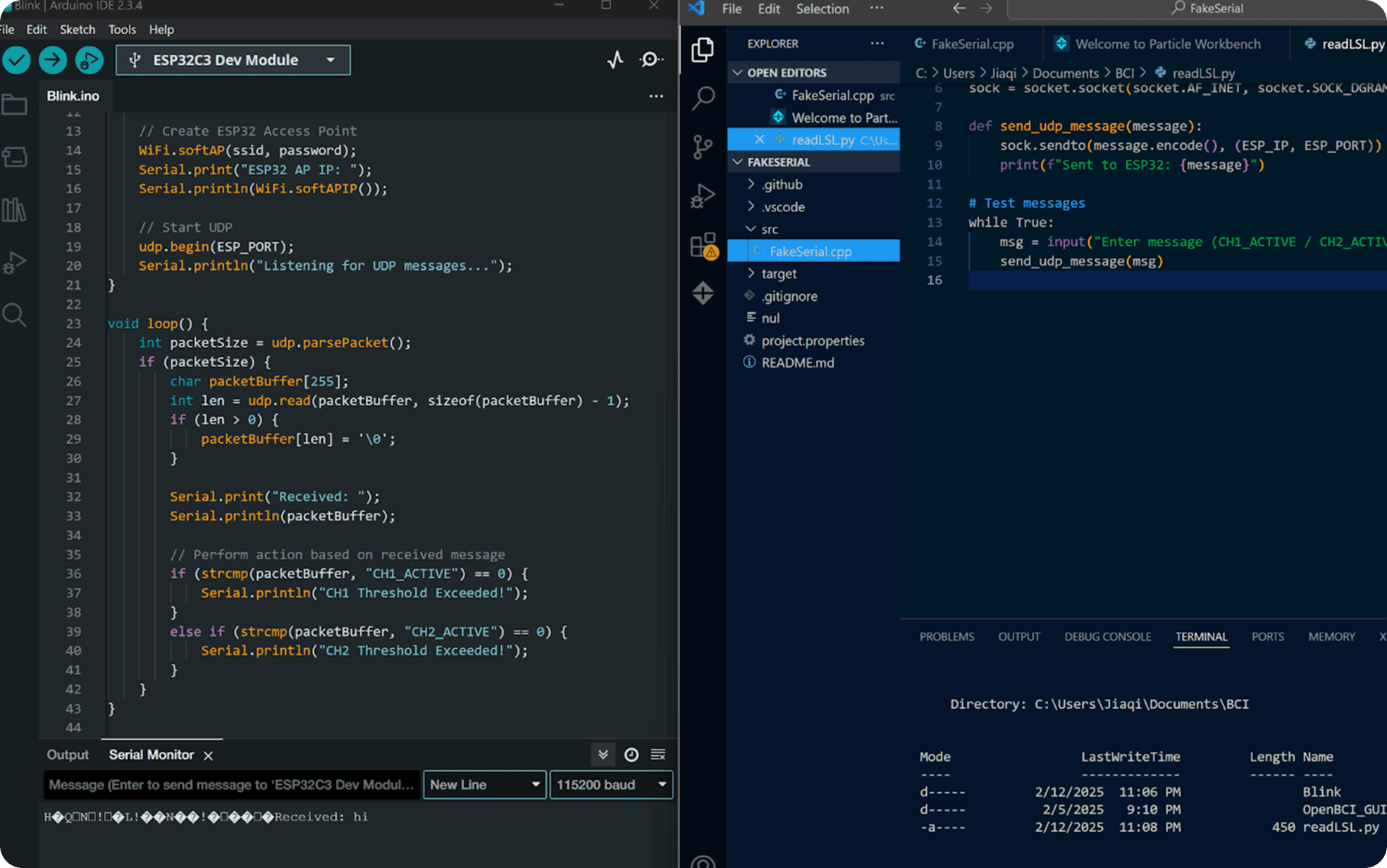

We used the OpenBCI_GUI software, ran a script in the terminal, and connected the setup to a microcontroller.
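How the terminal script talks to the microcontroller is not documented here, so the following is only a sketch of one common approach: a newline-terminated ASCII command protocol. The `NAME,v1,v2,...` format and the names `encode_command` and `decode_command` are hypothetical. On the host side, the resulting bytes could be written with pySerial, for example `serial.Serial(port, baudrate).write(...)`, where the port name and baud rate depend on the board.

```python
def encode_command(name, *values):
    """Encode a hypothetical line-based command, e.g. b"GLOW,255,153,0\n"."""
    if not name.isalnum():
        raise ValueError("command name must be alphanumeric")
    ints = [int(v) for v in values]
    if any(not 0 <= v <= 255 for v in ints):
        raise ValueError(f"values must fit in one byte: {ints}")
    return (",".join([name.upper(), *map(str, ints)]) + "\n").encode("ascii")


def decode_command(line):
    """Parse a command line back into (name, values), as firmware might."""
    name, *fields = line.decode("ascii").strip().split(",")
    return name, [int(f) for f in fields]


if __name__ == "__main__":
    packet = encode_command("glow", 255, 153, 0)
    print(packet, decode_command(packet))
```

A text protocol is easy to inspect from a serial monitor while debugging; a binary framing would be more compact if bandwidth ever became a concern.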

VR Visualization in Unity

Our system visualizes both the mother’s emotions and the baby’s movements through a dynamic VR environment in Unity.

A tranquil setting with still water represents calmness, while shifting weather (sunlight, rain, or clouds) mirrors the mother’s changing mood. Made by Jiaqi Wang.
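One way to map a calmness score onto the weather states described above is a threshold table with hysteresis, so that the scene does not flicker between states when the score hovers near a boundary. The sketch assumes a score in [0, 1] (for example, from an EEG relaxation measure); the thresholds, margin, and `WeatherMapper` class are illustrative, not values from the project. It is written in Python for consistency with the other examples; a Unity script could follow the same structure.

```python
LEVELS = ["rain", "clouds", "sunlight"]   # ordered from least to most calm
BOUNDARIES = [0.35, 0.6]                  # hypothetical score boundaries between levels


class WeatherMapper:
    """Maps a calmness score to a weather state with hysteresis."""

    def __init__(self, margin=0.05, start="clouds"):
        self.margin = margin
        self.index = LEVELS.index(start)

    def update(self, score):
        # Step up only when the score clears the next boundary by `margin`.
        while self.index < len(BOUNDARIES) and score >= BOUNDARIES[self.index] + self.margin:
            self.index += 1
        # Step down only when the score drops below the lower boundary by `margin`.
        while self.index > 0 and score < BOUNDARIES[self.index - 1] - self.margin:
            self.index -= 1
        return LEVELS[self.index]


if __name__ == "__main__":
    mapper = WeatherMapper()
    for s in [0.62, 0.70, 0.58, 0.50, 0.10]:
        print(s, mapper.update(s))
```

The margin trades responsiveness for stability: a wider margin means fewer weather changes, but the scene reacts later to a genuine shift in mood.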

VR Interaction

EEG readings reflect the mother’s emotional state in VR. If she feels distressed, the space dims, creating a subdued atmosphere. Her partner, immersed in VR, perceives these emotional cues and can respond by lighting a symbolic lotus lamp.

The partner’s action triggers LEDs on the mother’s wearable, offering a tangible sense of comfort. Made by Jiaqi Wang.
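The dim-and-respond loop described above can be sketched as a small state object that holds the mother’s latest calmness score, derives the scene brightness from it, and queues an LED command when the partner lights the lotus lamp. Everything here is an assumption for illustration: the [0, 1] score, the linear dimming curve, the distress threshold, the stronger glow during distress, and the `("GLOW", r, g, b)` command tuple.

```python
from dataclasses import dataclass, field


@dataclass
class SharedSession:
    """Hypothetical glue between the mother's EEG state, the partner's VR
    scene, and the LED wearable. Calmness scores are assumed to lie in [0, 1]."""
    distress_threshold: float = 0.3
    min_brightness: float = 0.3
    calm: float = 1.0
    led_queue: list = field(default_factory=list)

    def update_calm(self, score):
        self.calm = min(max(score, 0.0), 1.0)          # clamp to [0, 1]

    @property
    def distressed(self):
        return self.calm < self.distress_threshold

    @property
    def scene_brightness(self):
        # Linear dimming: full brightness when calm, never below min_brightness.
        return self.min_brightness + (1.0 - self.min_brightness) * self.calm

    def partner_lights_lamp(self):
        # Queue a warm glow for the wearable; brighter when she is distressed.
        level = 255 if self.distressed else 128
        command = ("GLOW", level, level * 3 // 5, 0)   # amber-ish RGB
        self.led_queue.append(command)
        return command


if __name__ == "__main__":
    session = SharedSession()
    session.update_calm(0.2)
    print(session.distressed, round(session.scene_brightness, 2))
    print(session.partner_lights_lamp())
```

Keeping a floor on brightness means the partner’s scene never goes fully dark, so the lotus lamp stays reachable even at the lowest calmness score.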

VR Visualization in Unity

EMG sensors detect fetal movements, allowing the baby’s kicks to be visualized in VR.


Wearable Interaction

The partner can interact with this digital presence, and their touch generates real-time feedback on the mother’s LED vest in the form of glowing patterns, strengthening the connection among all three: mother, baby, and partner.
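A glowing pattern centered on the point of touch can be approximated by giving each LED a brightness that fades with its distance from that point. This sketch assumes the vest’s LED layout is known as hypothetical (x, y) coordinates in metres and that the partner’s touch in VR has already been mapped onto the same coordinates; the linear falloff, radius, and `glow_pattern` name are illustrative choices.

```python
import math


def glow_pattern(led_positions, touch, radius=0.15, peak=255):
    """Brightness (0..peak) for each LED, fading linearly to zero at `radius`
    metres from the touch point. Positions are hypothetical (x, y) pairs."""
    if radius <= 0:
        raise ValueError("radius must be positive")
    levels = []
    for x, y in led_positions:
        distance = math.hypot(x - touch[0], y - touch[1])
        falloff = max(0.0, 1.0 - distance / radius)    # 1 at the touch, 0 beyond radius
        levels.append(int(round(peak * falloff)))
    return levels


if __name__ == "__main__":
    leds = [(0.0, 0.0), (0.05, 0.0), (0.1, 0.05), (0.3, 0.0)]
    print(glow_pattern(leds, touch=(0.0, 0.0)))
```

Animating the radius over a few frames would turn the static pattern into an expanding ripple, which may read more clearly as a response to touch.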

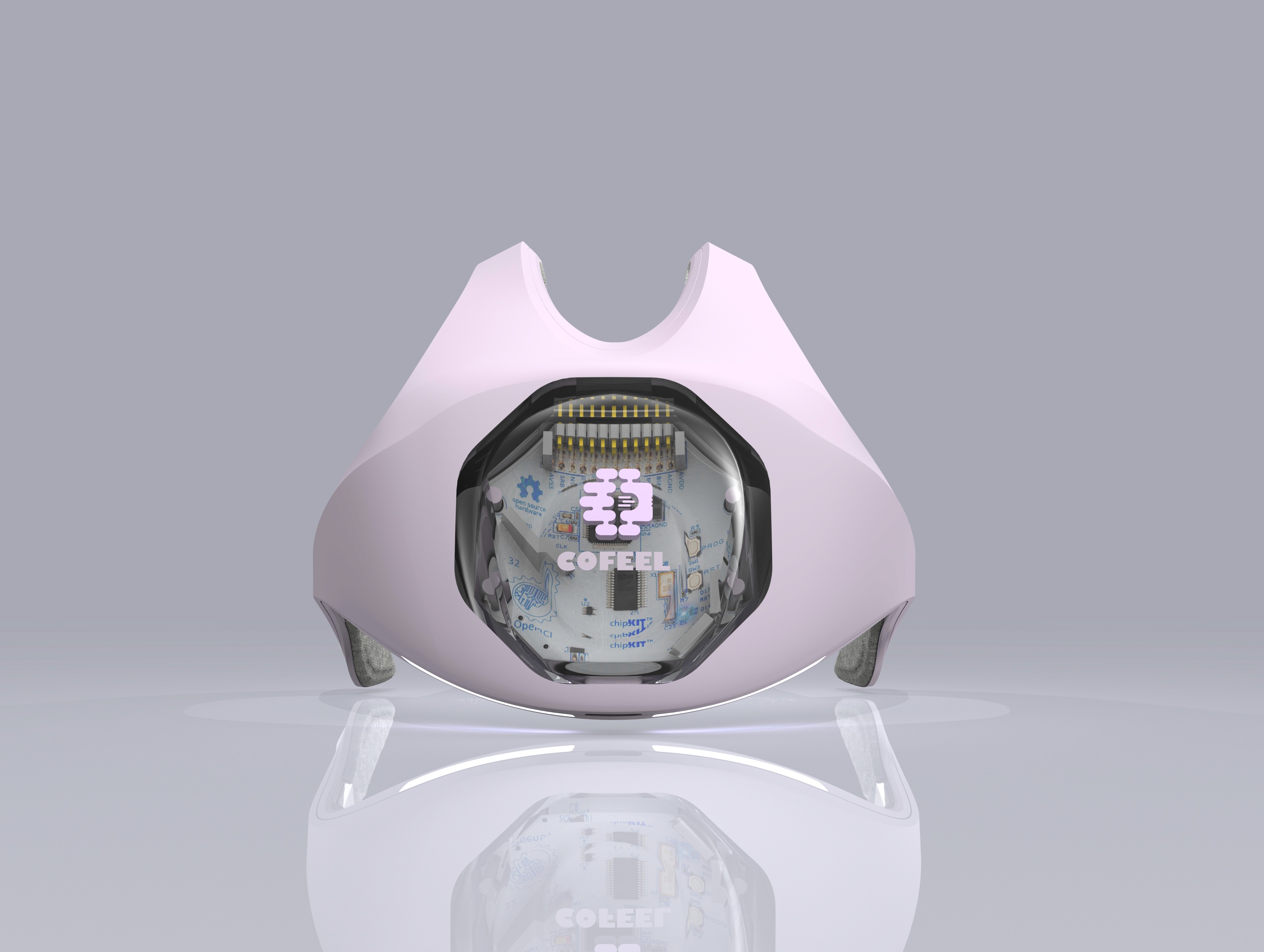

Prototypes

Process and Physical Prototypes

We made four prototype iterations.

Next step

As a next step, we plan to develop a tactile vest for the partner or family member, generating localized vibrations on their abdomen to mirror fetal movements.

By translating the unique experience of pregnancy into a shared visual and tactile interaction, CoFeel fosters deeper emotional connection, transforming pregnancy into a journey experienced together rather than in isolation.

Learnings

System design from BCI to VR

When I joined Reia, it was just a concept: an idea waiting to be developed into a fully functional web portal and VR experience that clinicians and patients would actually use. One of the most fascinating aspects for me was running A/B tests on different user interfaces. It was striking to see how even small changes, such as how progress is visualized or how rewards are framed, could affect user engagement and outcomes.

Collaboration

Working closely with engineers taught me the importance of collaboration and teamwork. Each discipline had its own priorities, insights, and goals, which often led to spirited discussions where we had to align our visions and find a common path forward. The challenge was not just sharing ideas, but listening, compromising, and finding solutions that balanced creativity and technical feasibility. It reinforced for me that great products are born not from individual effort, but from collective passion and dedication to a shared vision. This collaborative foundation shaped the design and guided decisions throughout the process.

©2026

FAQ

01

What tools and technologies were used in the project?

02

What is your inspiration?

03

How does the product stand out in the market? What did competitive research show?

04

What do you want to improve in the future?
